Stanford AI Lab builds an algorithm to help teachers grade coding homework

Teachers, rejoice! Soon, an algorithm could help grade your students’ assignments using artificial intelligence.

Over the last decade, AI algorithms have generated headlines around the world. They’re starting to impact many aspects of our daily life, bringing drastic changes to our world — from beating world Go champion Lee Sedol, to being embedded in everyone’s mobile devices as smart assistants like Siri and Alexa.

But what can AI do for education and teachers? A team at Stanford AI Lab led by Professor Chris Piech believes that the kind of AI algorithm that formed the backbone of mastering the game of Go can also help online education and significantly improve student learning.

While computer science is one of the fastest-growing industries, just over 50% of high schools offer computer science classes. Many free online courses can only support simple student programs that can be checked automatically by supplying a simple input and comparing the result against the desired output. Yet the most popular and exciting kinds of programming often involve creating software that supports interaction between people and computers, like video games, shopping services, or other interactive applications.

But so far, there’s no automatic way to grade such interactive programs, which means they’re often not part of the curriculum and students lose out. To make high-quality CS education available to all students, even in regions where schools don’t offer coding classes, scalable automated grading for all types of coding assignments is needed. It is also the first step toward providing intelligent, personalized feedback to students.

The challenge of grading coding assignments

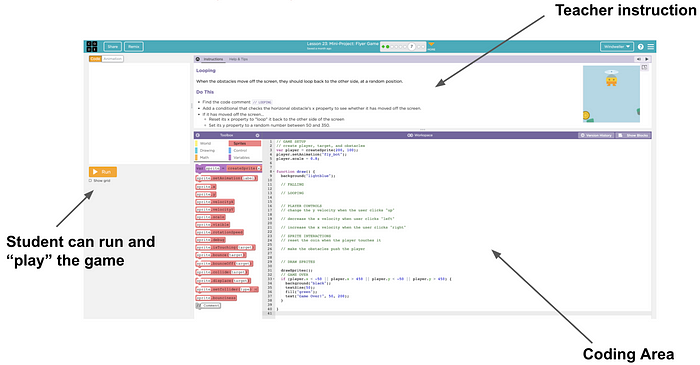

Code.org provides many game development and app design assignments in its curriculum. In these assignments, students write JavaScript programs in a code editor embedded in the web browser. Game assignments are a great way to examine students’ progress: students not only need to grasp core concepts like if-conditionals and for-loops but also use these concepts to write the physical rules of the game world, such as calculating the trajectories of objects, resolving the inelastic collision of two objects, and keeping track of game states.

Automated grading on the code text alone can be an incredibly hard challenge, even for introductory-level computer science assignments. Why?

- Two solutions that are only slightly different in text can have very different behaviors, and two solutions that are written in very different ways can have the same behavior. As such, some models that people develop for grading code can be as complex as those used to understand paragraphs of natural language.

- Some assignments allow students to write in different programming languages, which creates another layer of difficulty and vulnerability to exploits. What if the algorithm is more accurate at grading Java programs than at grading C++ programs?

- Because new assignments are constantly introduced, grading is necessary for the first student working on an assignment, not just the millionth, so the “collect data, train, deploy” cycle isn’t suitable in this context. Supervised machine learning for things like automated visual object recognition has been extremely powerful but typically relies on having access to enormous amounts of data labeled with each object. In contrast, for programming assignments it is not possible to collect a massive labeled dataset to train a fully supervised learning algorithm for each and every assignment.
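The first point in the list above can be made concrete with a small Python sketch. It is only an illustration: the three update functions and the `simulate` helper are hypothetical and are not taken from Code.org or from the paper. The first two functions differ by a single word yet behave very differently, while the first and third look different but behave identically.

```python
def update_a(y, vy, top=0, bottom=10):
    """Move the ball one step and reverse direction at either wall."""
    y += vy
    if y <= top or y >= bottom:
        vy = -vy
    return y, vy


def update_b(y, vy, top=0, bottom=10):
    """Same text as update_a except 'and' for 'or': the ball never bounces."""
    y += vy
    if y <= top and y >= bottom:  # can never be true when top < bottom
        vy = -vy
    return y, vy


def update_c(y, vy, top=0, bottom=10):
    """Written differently from update_a, but with the same behavior."""
    new_y = y + vy
    inside = top < new_y < bottom
    return new_y, (vy if inside else vy * -1)


def simulate(update, steps, y=5, vy=1):
    """Run an update rule for a number of steps and record the ball positions."""
    positions = []
    for _ in range(steps):
        y, vy = update(y, vy)
        positions.append(y)
    return positions


if __name__ == "__main__":
    print("a == c:", simulate(update_a, 20) == simulate(update_c, 20))
    print("a == b:", simulate(update_a, 20) == simulate(update_b, 20))
```

A grader that compares program text would likely judge `update_a` and `update_b` to be nearly the same program, even though only one of them keeps the ball on the screen.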

Making the grade

Stanford Computer Science PhD student Allen Nie, along with Professor Emma Brunskill and Professor Chris Piech, developed a solution: Play-to-Grade, which they call the “first step” toward solving this incredibly difficult problem. It’s described in their recent paper, published at Neural Information Processing Systems (NeurIPS), a top machine learning conference.

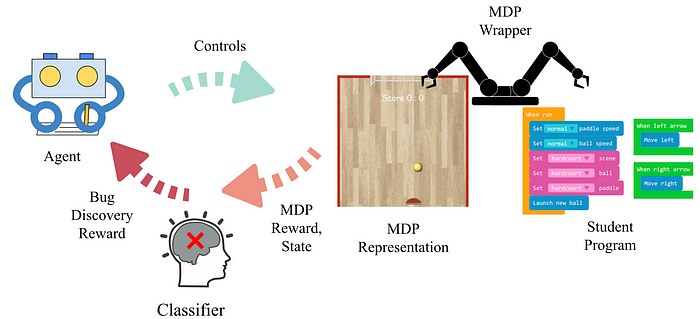

The Play-to-Grade method uses a popular technique in artificial intelligence, reinforcement learning, to train an agent that learns to play the teacher’s correct implementation of the game. While playing, the agent memorizes its experience of the game, including how the objects move, the rules of the game, and how things should interact with each other.

The agent learns to recognize that all three student implementations of the game shown above are, in fact, incorrect! One program’s wall does not allow the ball to bounce off it. Another program’s goal post does not let the ball go through the post. The last program gives the player one point whenever the ball bounces off any object.
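The idea of playing a student’s game and checking each move against the teacher’s version can be sketched in Python. This is a toy illustration under heavy assumptions, not the authors’ implementation: the game is reduced to a one-dimensional ball with a wall and a goal, a scripted action sequence stands in for the trained reinforcement learning agent, and an exact comparison of states stands in for whatever the agent has learned about the reference game. All names here are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, int, int]          # (ball position, velocity, score)
Step = Callable[[State, int], State]  # (state, action) -> next state

WALL, GOAL, START = 0, 10, 5


def reference_step(state: State, action: int) -> State:
    """Teacher's rules: bounce off the wall, score when the ball reaches the goal."""
    pos, vel, score = state
    if action != 0:              # a paddle hit sets the ball's direction
        vel = action
    pos += vel
    if pos <= WALL:              # wall: the ball bounces back
        return (WALL, 1, score)
    if pos >= GOAL:              # goal: one point, ball returns to the start
        return (START, -1, score + 1)
    return (pos, vel, score)


def wall_does_not_bounce(state: State, action: int) -> State:
    """Buggy student program: the ball sticks to the wall."""
    nxt = reference_step(state, action)
    return (WALL, 0, nxt[2]) if nxt[0] == WALL else nxt


def goal_is_blocked(state: State, action: int) -> State:
    """Buggy student program: the ball bounces off the goal and never scores."""
    pos, vel, score = state
    if action != 0:
        vel = action
    if pos + vel >= GOAL:
        return (GOAL, -1, score)
    return reference_step(state, action)


def scores_on_any_bounce(state: State, action: int) -> State:
    """Buggy student program: bouncing off the wall also gives a point."""
    nxt = reference_step(state, action)
    return (nxt[0], nxt[1], nxt[2] + 1) if nxt[0] == WALL else nxt


def play_to_grade(student_step: Step, actions: List[int]) -> Optional[Tuple[State, int]]:
    """Play the student's game and return the first (state, action) whose
    outcome differs from the reference game, or None if no difference is seen."""
    state: State = (START, 1, 0)
    for action in actions:
        observed = student_step(state, action)
        expected = reference_step(state, action)
        if observed != expected:
            return state, action
        state = observed
    return None


# Scripted stand-in for an agent: push toward the wall, then toward the goal.
ACTIONS = [-1] * 8 + [1] * 12

if __name__ == "__main__":
    for program in (reference_step, wall_does_not_bounce,
                    goal_is_blocked, scores_on_any_bounce):
        bug = play_to_grade(program, ACTIONS)
        print(program.__name__, "looks correct" if bug is None else f"differs at {bug}")
```

The sketch only finds a bug if the play sequence actually reaches the wall or the goal, which hints at why an agent that knows how to play the game is useful for grading.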

With help from Code.org, the Stanford AI team was able to verify their algorithm’s performance on a massive number of unlabeled, ungraded student submissions. The game Bounce, from Code.org’s Course3 for students in 4th and 5th grades, provides a real-life dataset of the variety of bugs and behaviors that appear in student programs. The dataset was compiled from 453,211 students who attempted this assignment. In total, it consists of 711,274 programs.

On this dataset, Play-to-Grade can grade 94% of the programs correctly, while other comparable methods reach only 68% to 70%. With the public release of this dataset, the Stanford AI team hopes to open up this research space for future work.

Developing AI-based solutions for grading student homework can scale up the capability of online coding education platforms, increase student engagement, and lessen the grading burden on teachers. The Stanford AI team hopes to expand this algorithm’s capability to accommodate more types of coding assignments by studying various kinds of student creativity. This algorithm could usher in a new era of course and assignment design for online education platforms: it expands what can be graded automatically, removes constraints on the types of assignments online platforms can host and create, and opens up possibilities for all who want to learn computer science!

— Allen Nie, Emma Brunskill, and Chris Piech of Stanford AI Lab